The robots can understand us now

The definitive guide to Huawei ML Kit’s Automatic Speech Recognition

Hi, I’m your father, Luke. No, wait. This is not the right article for that 😄. Silly jokes aside, welcome to another article about Huawei’s Machine Learning Kit. In this episode, I will recap how to use Automatic Speech Recognition (ASR) in roughly 1000 words or fewer. At least, that is my goal for this article.

For those who share the spirit of Linus Torvalds’ famous quote “Talk is cheap, show me the code”, or who at least get into that mood sometimes like me, you’re not forgotten. Scroll all the way down to the end of the article and you will find the holy GitHub repository to tear apart 😉. Also, before we start, I would like to mention again that this guide and the repository will be updated as new versions of ASR are released. So, you might see new capabilities, newly supported languages, or even new API usage in your future readings. Actually, the same goes for the whole Mr.Roboto series for as long as I’m around and rolling on Medium.

## Automatic Speech Recognition

Automatic speech recognition (ASR) can recognize speech not longer than 60s and convert the input speech into text in real time. This service uses industry-leading deep learning technologies to achieve a recognition accuracy of over 95%.

Above is another brief and nice description of ASR from the Huawei docs. These guys know how to explain things in a short and neat way, unlike me. I always tend to explain things in a wild and strange way, and things can get messy while I write. So, please prepare for a wild and weird reading journey 😃.

Let’s see the capabilities of ASR first:

### Capabilities

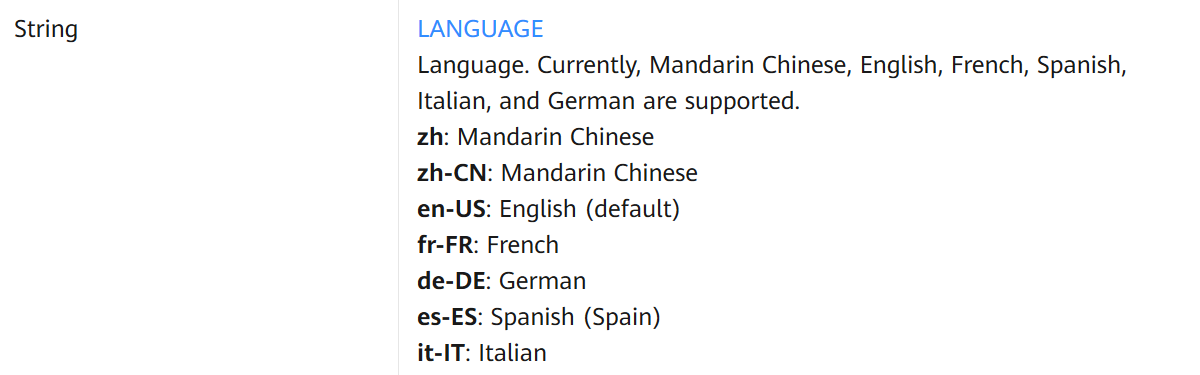

- Recognizes Mandarin Chinese (including Chinese-English bilingual speech), English, French, German, Spanish, and Italian

- Provides real-time result output

- Offers options with and without the speech pickup UI

- Detects the start and end points of your speech automatically

- Prevents silent parts from being sent in voice packets

- Intelligent conversion to digital formats: For example, the year 2020 can be recognized from voice input

Some languages are not supported in some regions. So, you should look out for that below.

### Limits

- Only available on Huawei phones

- Requires internet to use speech recognition capability

I believe these limitations will be history before long, because the Huawei Core team is still working on ML Kit and improves a thing or two with each release. That means you should expect to see more in the near future. It also means more edits for this article 😅.

The test app contains two different methods that can be selected via radio buttons.

The first option triggers ASR in Huawei’s default way. It starts a custom result activity that shows a nice-looking bottom dialog, like the one in the ASR preview gif above, and returns its result in the onActivityResult(…) method that you handle in your Activity/Fragment. Keep in mind that this option does not trigger event callbacks even if you attach a listener.

The second option, unlike the first, does trigger event callbacks. That means you can react inside the callbacks however you like. For example, you might show custom UI elements in your own design, or kick off some computationally heavy process with the text the callback provides. This is all up to you. In my test app, I play start and end beeps in the related callback events to make it clear when recording starts and finishes.

The workflow of ASR with the speech pickup UI is as follows:

- Call `MLAsrRecognizer.createAsrRecognizer(context)` to get an instance of MLAsrRecognizer. As you might guess, MLAsrRecognizer uses the Singleton design pattern to hand you an instantiated object.

- Create an Intent that takes `MLAsrCaptureActivity::class.java` as its constructor parameter, then put two key-value extras on it. This part is common to both ASR usages. The first one is the `MLAsrConstants.LANGUAGE` key, which indicates the language. The values that can be used are shown in bold in the following picture.

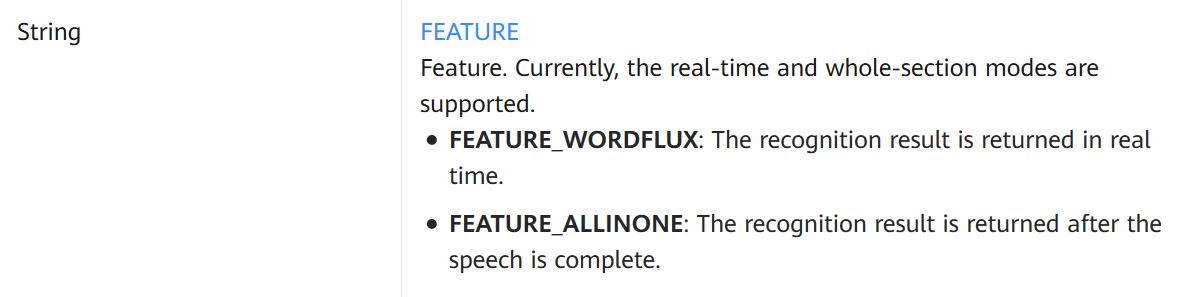

The second and last parameter is the `MLAsrCaptureConstants.FEATURE` key, which selects the mode in which we want to use ASR. There are only two modes, as shown below.

- Then start the intent with `startActivityForResult(intent, REQUEST_CODE)` and handle the result in `onActivityResult(…)`. Inside it, first check that `requestCode` matches our `REQUEST_CODE` variable. Then, if `resultCode` matches `MLAsrCaptureConstants.ASR_SUCCESS`, ASR picked up a result from the device microphone. Finally, we can safely unwrap the Bundle’s data with `extras().getString(MLAsrCaptureConstants.ASR_RESULT)`, which should give us the result.
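The pickup UI steps above can be sketched roughly like this. It is a minimal sketch rather than the test app’s actual code: the Activity name, the request code value, and the `"en-US"` language string are my own placeholders. I also use `MLAsrCaptureConstants.LANGUAGE` for the language extra, `FEATURE_WORDFLUX` as the mode, and the `ASR_FAILURE` / `ASR_ERROR_CODE` constants for the failure branch, as I remember them from Huawei’s samples, so please verify them against the SDK version you use.

```kotlin
import android.content.Intent
import android.util.Log
import androidx.appcompat.app.AppCompatActivity
import com.huawei.hms.mlplugin.asr.MLAsrCaptureActivity
import com.huawei.hms.mlplugin.asr.MLAsrCaptureConstants

// Hypothetical Activity used only for illustration.
class PickupUiActivity : AppCompatActivity() {

    // Builds the capture Intent with the two extras and launches the pickup UI.
    private fun startAsrWithPickupUi() {
        val intent = Intent(this, MLAsrCaptureActivity::class.java)
            // Assumed language code; pick one that is supported in your region.
            .putExtra(MLAsrCaptureConstants.LANGUAGE, "en-US")
            // Assumed mode constant; the other mode is FEATURE_ALLINONE.
            .putExtra(MLAsrCaptureConstants.FEATURE, MLAsrCaptureConstants.FEATURE_WORDFLUX)
        startActivityForResult(intent, REQUEST_CODE_ASR)
    }

    override fun onActivityResult(requestCode: Int, resultCode: Int, data: Intent?) {
        super.onActivityResult(requestCode, resultCode, data)
        // Ignore results that belong to other requests.
        if (requestCode != REQUEST_CODE_ASR) return

        when (resultCode) {
            MLAsrCaptureConstants.ASR_SUCCESS -> {
                // The recognized text travels in the result Intent's extras.
                val text = data?.extras?.getString(MLAsrCaptureConstants.ASR_RESULT)
                Log.d(TAG, "Recognized text: $text")
            }
            MLAsrCaptureConstants.ASR_FAILURE -> {
                // Assumed error key; null when the extras are missing.
                val errorCode = data?.extras?.getInt(MLAsrCaptureConstants.ASR_ERROR_CODE)
                Log.w(TAG, "ASR failed with error code: $errorCode")
            }
        }
    }

    companion object {
        private const val TAG = "PickupUiActivity"
        private const val REQUEST_CODE_ASR = 100 // arbitrary value
    }
}
```

You would call `startAsrWithPickupUi()` from a button click or similar, after the user has granted the microphone permission.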

The workflow of ASR without the speech pickup UI is as follows:

- Call `MLAsrRecognizer.createAsrRecognizer(context)` to get an instance of MLAsrRecognizer. As you might guess, MLAsrRecognizer uses the Singleton design pattern to hand you an instantiated object.

- Create your `MLAsrListener` object to handle callback events. Then, assign the callback with the `setAsrListener(MLAsrListener)` method of your MLAsrRecognizer object.

- Create an Intent that takes the `MLAsrConstants.ACTION_HMS_ASR_SPEECH` constant as its constructor parameter. Then add the two key-value extras, just like in the speech pickup UI workflow above.

- Finally, call the `startRecognizing(intent)` method of the MLAsrRecognizer object and handle the results in the MLAsrListener object.
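Here is a rough sketch of the workflow without the pickup UI. The helper function name, the log tag, and the `"en-US"` language string are hypothetical, and the `MLAsrListener` method set plus the `RESULTS_RECOGNIZING` / `RESULTS_RECOGNIZED` bundle keys are written as I recall them from the SDK docs, so treat them as assumptions and check your ML Kit version.

```kotlin
import android.content.Context
import android.content.Intent
import android.os.Bundle
import android.util.Log
import com.huawei.hms.mlsdk.asr.MLAsrConstants
import com.huawei.hms.mlsdk.asr.MLAsrListener
import com.huawei.hms.mlsdk.asr.MLAsrRecognizer

private const val TAG = "AsrNoUi"

// Hypothetical helper: starts recognition and returns the recognizer
// so the caller can destroy() it later.
fun startAsrWithoutPickupUi(context: Context): MLAsrRecognizer {
    val recognizer = MLAsrRecognizer.createAsrRecognizer(context)

    recognizer.setAsrListener(object : MLAsrListener {
        override fun onStartListening() {
            // The recorder is ready; a start beep could be played here.
            Log.d(TAG, "Listening started")
        }

        override fun onStartingOfSpeech() {
            Log.d(TAG, "Speech detected")
        }

        override fun onVoiceDataReceived(data: ByteArray?, energy: Float, bundle: Bundle?) {
            // Raw audio chunks arrive here; unused in this sketch.
        }

        override fun onRecognizingResults(partialResults: Bundle?) {
            // Intermediate text while the user is still speaking.
            val partial = partialResults?.getString(MLAsrRecognizer.RESULTS_RECOGNIZING)
            Log.d(TAG, "Partial: $partial")
        }

        override fun onResults(results: Bundle?) {
            // Final text; an end beep could be played here.
            val text = results?.getString(MLAsrRecognizer.RESULTS_RECOGNIZED)
            Log.d(TAG, "Final: $text")
        }

        override fun onError(error: Int, errorMessage: String?) {
            Log.w(TAG, "ASR error $error: $errorMessage")
        }

        override fun onState(state: Int, params: Bundle?) {
            // State changes such as silence or network issues; unused here.
        }
    })

    val intent = Intent(MLAsrConstants.ACTION_HMS_ASR_SPEECH)
        .putExtra(MLAsrConstants.LANGUAGE, "en-US") // assumed language code
        .putExtra(MLAsrConstants.FEATURE, MLAsrConstants.FEATURE_WORDFLUX) // assumed mode
    recognizer.startRecognizing(intent)
    return recognizer
}
```

Returning the recognizer is just one design choice. It keeps the sketch short and lets the calling Activity/Fragment own the object's lifecycle.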

❌ I would like to mention that you do not need the MLAsrListener callback in your project if you prefer to use ASR with Huawei’s speech pickup UI.

You can check the gists below to see how things work in concrete code examples. Fair warning ⚠️: I removed some repeated and long code blocks from the gists. That way, we can focus only on what matters most.

Finally, one last friendly reminder: release resources after you finish with recognition or on an onBackPressed() event, because leaving the recognizer alive might corrupt your business logic or make your Activity/Fragment crash. Just call destroy() on your MLAsrRecognizer instance. Better safe than sorry.
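One way to wire up the cleanup is sketched below. The Activity name and the nullable `recognizer` field are hypothetical, and I chose `onDestroy()` as the release point. Calling the same thing from `onBackPressed()` would follow the same idea.

```kotlin
import androidx.appcompat.app.AppCompatActivity
import com.huawei.hms.mlsdk.asr.MLAsrRecognizer

// Hypothetical Activity that owns a recognizer created elsewhere.
class AsrActivity : AppCompatActivity() {

    // Assigned when recognition without the pickup UI is started.
    private var recognizer: MLAsrRecognizer? = null

    override fun onDestroy() {
        // Release the recognizer so it does not outlive the screen.
        recognizer?.destroy()
        recognizer = null
        super.onDestroy()
    }
}
```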

## Test

Before you test it out, I would like to remind you that you need to do some pre-work to run the test app, because you need a pretty tiny JSON file to sort things out before using Huawei’s Kits on Huawei devices. You should read the following article about it.

⚠️ Each HMS integration requires the same initial steps to begin with. You could use this link to prepare your app before implementing features in it. Please don’t skip this part. This is a mandatory phase. HMS Kits will not work as they should without it.

After reading it, you should do one or two things to run the app. First, enable ML Kit under the Manage APIs tab on AppGallery Connect. You should see the image below after enabling it.

Then, download the generated agconnect-services.json file and place it under the app directory. Finally, find your API key under the App Information section in the General Information tab, and use it to initialize ML Kit like below in your Android Application class.
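A minimal sketch of that initialization could look like this. The class name and the placeholder key are hypothetical, and I am assuming the `MLApplication.getInstance().setApiKey(...)` call from the ML Kit common package, so double-check it against your SDK version.

```kotlin
import android.app.Application
import com.huawei.hms.mlsdk.common.MLApplication

// Hypothetical Application class; register it in AndroidManifest.xml
// through the android:name attribute of the <application> tag.
class AsrApp : Application() {

    override fun onCreate() {
        super.onCreate()
        // Placeholder value; use the API key shown in AppGallery Connect.
        MLApplication.getInstance().setApiKey("YOUR_API_KEY")
    }
}
```

Hardcoding the key keeps the sketch short. In a real project you would probably keep it out of source control.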

## GitHub Repository

That is it for this article. You can search for answers to any question that comes to mind on the Huawei Developer Forum. And lastly, you can find lengthy, detailed videos on the Huawei Developers YouTube channel. These resources diversify learning channels and make things easy to pick up and learn from a huge knowledge pool. In short, there is something for everybody here 😄. Please comment if you have any questions. Stay tuned for more HMS Development resources. Thanks for reading. Be safe, folks.